So. AI.

I could just join the chorus of voices opposing it in every way, shape, and form. Or I could throw morals to the wayside and hop on the grift train. Perhaps against all evidence, I refuse to believe that nuance is dead, so here’s a long, rambling post where I try to hash out where I stand. It’s been a long time coming, and I’m not sure how good my timing is, but this is a subject where I wanted to get my thoughts into at least a semblance of order first.

This is mostly from the perspective of a creator, focusing on AI as a creative tool (I debated throwing air quotes around some of those terms), but I will touch on some generalizations, especially in the last few paragraphs.

I am expecting this post to piss everyone off at least a little bit, perhaps even more so than my explicitly political posts. I’m going to be more charitable to AI technology and its use than a lot of creators, but on the other hand, AI bros are really not going to be happy with some of the things I have to say. I humbly ask you to read to the very end, whatever your perspective may be, and keep in mind that this post is about sorting out my own feelings as much as anything, and is far from a well-researched and thoroughly-refined dissertation.

A confession

I’ve been playing with AI here and there since 2023.

Okay, that’s not really a secret. I’ve posted a couple projects on itch with the dreaded generative content box checked, I’ve mentioned playing with AI offhand on Bluesky and on my blog. I may or may not have briefly posted slop AI fanfics under an alt.

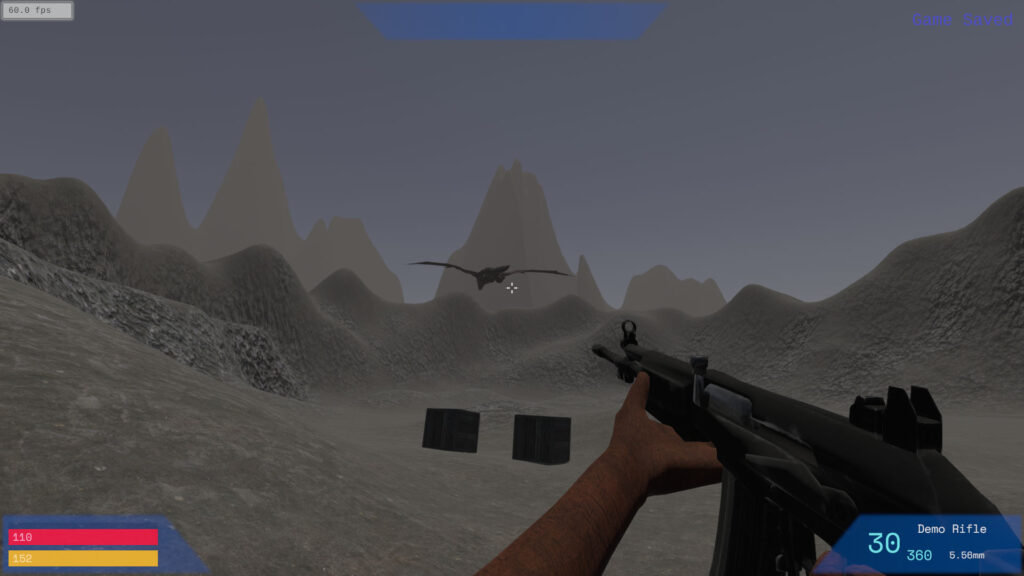

You won’t get blindsided by AI content from me, mind. I’ve been strongly against misrepresenting AI output as anything else from the beginning, and I decided early on to strictly separate projects with AI from the other ones. In the end I only made a few, one of which was semi-ephemeral. Shattered 3 is a bit of an odd case in that I never made a decision before shelving it, and the final prototype has both AI and AI-free versions.

Here’s my first hot take of the post: Everyone should experiment with AI, at least a little bit. Even if you’re ardently against it in every way, shape, and form, remember what Sun Tzu said about knowing your enemy. I was deeply unnerved by generative AI until I poked the proverbial bear and experienced what it was actually like to use it.

Spoiler alert: the shine wears off fast.

Know thy enemy

Unlike some of my friends, who were very excited about the new possibilities, I was a doomer about AI early on. My mind went to the worst possible scenarios, where all creative output is replaced with generated noise, all art replaced with synthetic simulacra. A future exactly the opposite of what automation has promised us from the beginning, where the fulfilling, creative pursuits are handled by machines and humans are relegated to menial labour. I avoided AI like the plague for a long time, resisting its siren song of a quick dopamine hit, but I poked at image generation at one point, and eventually started toying with ChatGPT.

There’s an old adage that the cure for fear is knowledge.

Once I started playing with it, though I remained cynical of the technology, the fear of a worst-case scenario diminished greatly. Part of that is because it’s simply not as good as its proponents insist; there are limitations and problems that have not been solved in the intervening years and may never be solved. For every demonstration of lifelike video generated or brilliant explanation delivered, there are a thousand examples of impossible hands and instructions on how to eat rocks.

But I think another part of it was how I was characterizing it in my own head. I was personifying it, and unusually (at least for me), the more I used it the better I understood it to be unthinking and unfeeling, and the less I personified it.

AI is many things, good or bad, but at the end of the day it is a tool, not a thinking, feeling agent with a will of its own. It may be a drain on society, but that is because of how it is developed and used, a consequence of the human interactions with the technology and the organic forces driving them. It is, ultimately, a hammer wielded by human hands, and it only has the power that we choose to give it.

We’re not dealing with SHODAN or GLaDOS here. At the very worst, we are dealing with a new tool with potential for evil, and that is a very familiar- and very human- opponent to face.

The temptations

Hot take number two: I don’t agree with the notion that no creative would ever want to use AI. I’ve heard a sentiment along the lines of “why would you want to delegate doing what you love to the machine” thrown around, and I have several real answers to that rhetorical question. I’m not arguing these to be adequate justifications. I’m calling these the temptations for a reason, and I think imagining a little devil whispering over your shoulder sets the tone just about perfectly.

The Tedious Bullshit. Not every part of every creative process is enjoyable to everyone. Implementing game logic is a lot more fun than writing its documentation. Maybe you just personally hate the inking step or hate writing travel scenes. Or you love designing characters, but animating 30 frames of them is dragging you down. It’d sure be nice if someone- or something- could take care of those for you.

The Efficiency Grindset. We’d all love to give each and every one of our projects all the time they deserve, but alas, the creative process must intersect with the real world. Unless you’re top in your field (and perhaps even then), deadlines, opportunity cost, churn and burnout limit how much we can put into each work. So why not fill in a chapter with paragraphs from an LLM, or generate a few backgrounds for your visual novel to save you the time of drawing them?

The Skill Shortcut. Learning to do it yourself isn’t a free action- even if it’s free to play, it takes a lot of time to master a skill, time you may or may not actually have. But what if you didn’t have to? What if something could step in and let you skip that whole process?

The Resource Gap. There’s only so much one can do alone. If you don’t have the complete skillset for a complex project, or if it’s just too big for one person on a sane timescale, just wanting to make it happen won’t make it a reality. If you had the money, maybe you could hire someone, or if you had the charm, bring in a collaborator. You don’t, but what about one that works for free (or close enough to it) and doesn’t need to be convinced?

The Muse. It can be tough finding a space to discuss your work, especially if you’re isolated physically or metaphorically, or if your friends aren’t similarly inclined. And online spaces can be really toxic now, especially in fandom. How about a friendly voice that always answers and never judges?

I don’t think this list is exhaustive, and there’s a fair bit of overlap, but these are the ones that struck me specifically. All of them are responses to specific frustrations I’ve felt, and I think for the most part they’re pretty common ones. In an ideal world, the temptations wouldn’t be relevant, but we are not spherical cows in a vacuum. There are 24 hours in a day, bills to pay, and all too often we have to make tradeoffs and choices we wish we could have avoided.

A conundrum

FunkyFrogBait did a video about AI… six, seven months ago? Wow, time flies. Although I don’t fully agree with every point in it, it’s a hilarious video with some surprisingly strong arguments and they’re a great storyteller, so I highly suggest checking it out.

There’s one philosophical point in it that’s stuck with me. I don’t remember the exact phrasing, but it basically amounts to: If something can only be created with the help of AI, should it be created at all?

I don’t have an answer to that question.

I think it’s easy to say “of course not” as an abstract outsider with no stake in the idea. I think it’d be a lot harder for an empathetic person to, face to face, shut down someone who’s really excited about the thing they want to make and about this new technology that finally lets them do it.

I have been that person. There are a thousand and one things I’d love to make that I don’t have the skills, knowledge, or resources to do. There are so many ideas I’d like to turn into real works that I know can never be.

I spent half a decade trying anyway, reaching for the skies with moonshot projects that weren’t realistic for someone with my ability and resources. I used every trick I knew, took every shortcut I could. If generative AI had existed at the time, I probably would have leveraged it as much as possible. Would the end product have been any good? Could it have justified to any extent the way in which it was made?

I don’t know, I never got there. I don’t have an answer here. If you equate the AI of today to pirating commercial assets or ripping resources out of commercial games, would I have been okay with a project built that way? All I can give is a wishy-washy answer that it depends on the context: Is it being sold for money? How is it represented? Is it transformative? But I never crossed that bridge myself.

For my part, I’ve made my peace with the impossible projects that will never be… mostly. I pulled the plug on the massive moonshot project in 2021, moved away from bigger projects over the next few years, and eventually started winding down projects I’d classed as difficult but achievable to preserve my own sanity. I’m in a much better place overall now, but I’d be lying if I said the feeling of longing was totally gone.

Turning away

I could go on about the environmental and infrastructural impacts of massive datacenters, the copyright concerns around training sets, or the corporate practices of the AI juggernauts themselves. Those are all big problems that should give any sane person pause, and others have written far more about this and far better than I could include here.

Much cooler take: While everyone should try AI, almost no one should actually use it in production.

I don’t think these problems are unsolvable, but it’s abundantly clear that the companies and individuals at the forefront of the field have no interest in even trying. The notion of ethical AI died some time in 2024, and now it’s a race to… grab the biggest slice of the pie before the bubble bursts, probably. Given that AI investment is basically propping up the world economy at this point, that’s going to be fun when it happens.

What ended my experiments with AI? In the end I just kind of… got bored with it. I think I had more fun probing the limits than working within them once I found them. The novelty of image generation wore off quickly, and LLMs not long after. There are too many issues to use it in production, and as an initially fun toy it turned repetitive and dull quickly.

Image generation initially had a huge wow factor to it, but I found it almost impossible to get a result that matched what I actually had in mind. Maybe I just suck at prompting, but the process is the problem: it’s not like creating your own art or working with a human artist. There are no discussions, early sketches, or rough drafts where you refine the ideas before committing to the final piece. You prompt, generate, and either take the best result as “good enough” or start over from step one. Even inpainting doesn’t change that much; it just gives you the option of swapping out part of the end product wholesale.

The chatbots-on-steroids I had a little more fun with before completely disengaging. I call them chatbots partially out of derision, but also because they still do have the feel of the pre-LLM chatbots of old. Very rigid and formulaic in their answers, strongly reflecting cultural biases and rarely straying from safe averages, but at the same time wildly unpredictable, sometimes giving completely different answers to the same question if worded slightly differently or utterly ignoring important points you’d just told it to consider. You can generate a lot of text quickly, but it all reads the same and you get bored of it quick. If you try to use it for worldbuilding or other idea crafting, you get something that feels very derivative without unique flavour.

What could(n’t) have been

I’m disappointed in how things turned out because AI-driven tools could have been useful in my workflow, and I think in many others’ as well. While I’m not a fan of replacing the process start to finish, I do think more scope-limited tools could be useful (or at least could have been useful) as assists to the creative process.

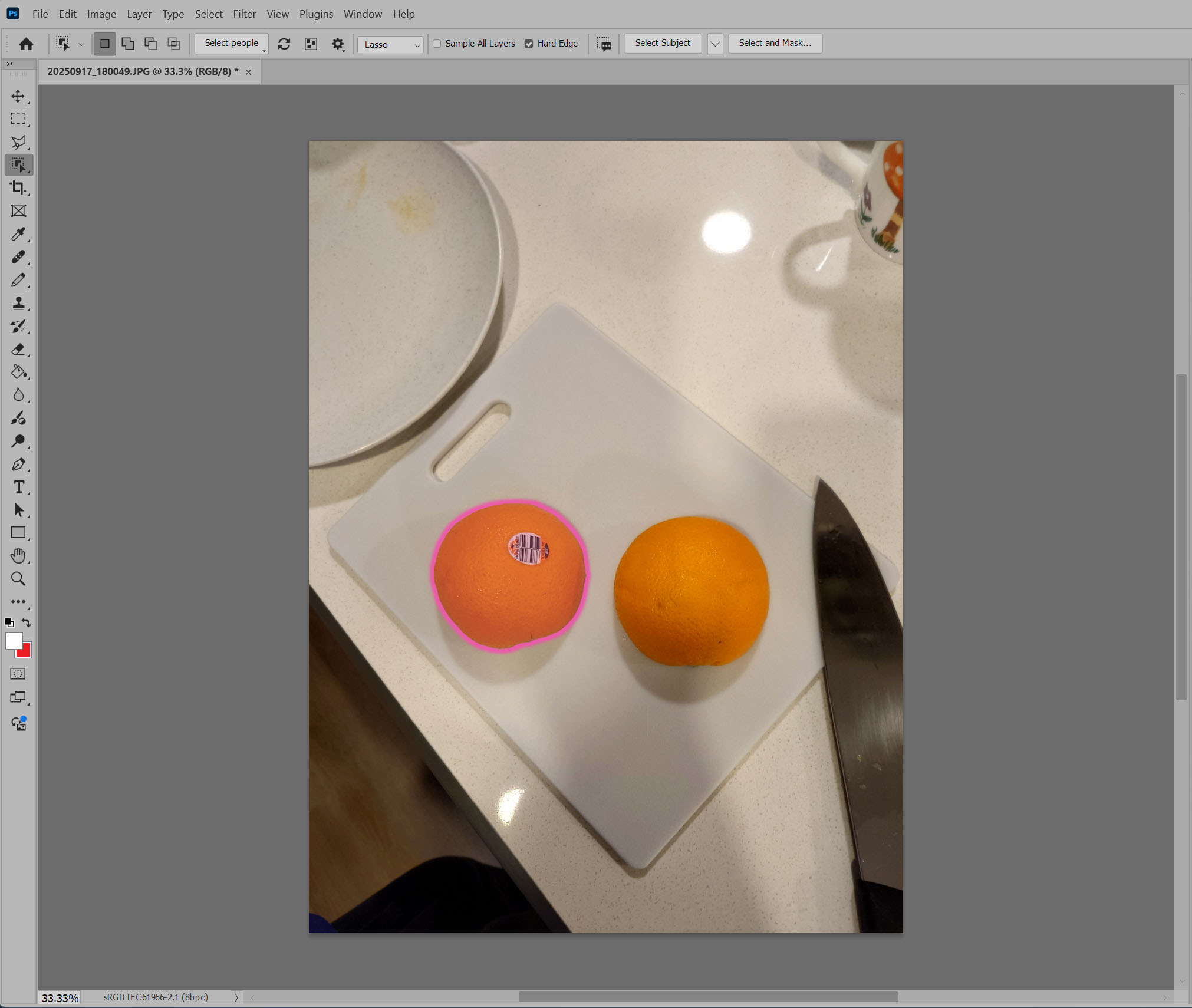

Generative erase, replace, and extend are faster, easier, and often more effective (at least in amateur hands) than trying to manually cut and stitch. ChatGPT gives inane responses half the time, but is a surprisingly good rubber duck for hashing out ideas. Gemini’s answers to code questions are a mixed bag overall, but usually work as a “try the simplest thing that could possibly work” starting point.

I would never want to replace the entire creative process, or even major steps, with any of these. Most of them I wish worked a little better. But I could find utility in them even in their current state, and I could glimpse the potential in the proverbial AI copilot. I think some- not all, but some- AI-driven tools can be genuinely helpful to creators in many different fields.

At this point, though, the well is thoroughly poisoned. The leading AI vendors feel like they’re vying for the title of “worst company you can name”- you know it’s bad when Adobe looks like the good guy. Anything related to AI is heavily stigmatized in the creative world- and given all the concerns around it and how AI stans push it, I can hardly blame anyone for never giving the benefit of the doubt.

It’s also increasingly clear at this point that “if it worked a little better” is a much harder ask than it sounds, and we’re well into diminishing returns. There’s no breakthrough around the corner, just increasingly unsustainable amounts of computing power thrown at the problem in the hope that it’ll somehow work out. In some cases, we’re seeing regressions from attempts to cut ballooning costs and from training sets that are themselves full of AI noise.

(It’s also pretty clear at this point that there’s no business model that’s going to work for most of these services, but I’m happy calling that someone else’s problem.)

What’s old is new again

As crazy as it sounds, I actually agree with the AI bros on some level. I do believe there is a future where AI represents a net benefit to society.

We did not get that future.

And if anything, the people who promised it to us are the very same ones who are trying their best to make sure we never get it.

It feels like blockchain all over again. A very interesting technology, immediately used for the most cynical purpose imaginable to the point of being basically synonymous with it, pushed hard by careless people for selfish reasons. A popular buzzword at first that turned sour over time until it was associated with actual scams. Initial excitement even from the stodgiest of industries and most conservative of organizations, turning eventually to wholesale rejection. And, by some, a melancholic longing for what could have been.

AI is a very overloaded buzzword at this point, encompassing a wide range of barely related technologies. Like a lot of other people, I’ve been using it almost as a synonym for the latest generative AIs, but everything from power management algorithms to machine vision processing has had the AI term slapped on it. As the AI buzzword picks up negative connotations by association, I think we’ll see a lot of “AI” tech rebranded.

There will be survivors when the bubble pops, and hopefully some of them will be the positive forces we once thought we were getting out of this. Honestly, that’s probably misplaced optimism, but it’s not wrong to hope.

Until then, I think I’m going to stick to the Old Ways.

Post Scriptum

This post was written, almost done editing, and days away from being posted when I flew off on a trip to the other side of the world. That gave me some time to reflect… and also had me relying on technology that may or may not have been AI driven.

I knew I’d be using machine translation heavily going in- I was in a country where I can’t speak or read the language. I used Google Lens primarily, and it wasn’t perfect, but it was generally good enough and it was convenient to use. For the most part, I was using it to read packaging before buying things, and to figure out the occasional sign or poster. I also ran into one helpful clerk who did not speak English well enough to answer a complicated question I asked, but was able to respond by typing in their native language into an app, which translated it for me to read.

I’ve heard professional translators decry the rise of machine translation, in perhaps a portent of the current debate about AI. I’m still with them when it comes to professional translation for publishing- I’ve seen my share of terrible manuals, and this trip I was handed a few English menus that I’m pretty sure were translated with older tech that was worse than what my phone could do. But that’s not the use case here. This kind of real-time machine translation is a game-changer for being able to function in a foreign land. It’s been a long time since I’ve been outside of Canada, and the last time I was in a non-English-speaking country I think we were still using Babel Fish. I would have been utterly lost then, and I knew it. This time around, I had a lifeline, and the confidence that came with it.

I can’t speak personally to the experience of cross-language online friendships, or people who only speak a less common language being able to interact with a broader world, but I’ve seen a glimpse of the connection across boundaries that technology has promised.

Whether it’s okay to just throw an entire written work into an AI to translate and edit so you can post it in a language you can’t write in is another question entirely, much more along the lines of the “if it can’t be done without AI, should it exist” conundrum.

One more thing: image search actually works the way science fiction promised us it would! Well, most of the time- there were a few things it completely failed to identify. But for the most part, I could point Lens at an object and get a mostly cromulent explanation of what I was looking at. I’ve noticed this in search engines, too- it’s been improving for years, and now we have actual explainers. If nothing else, this is pretty cool to experience, in a “wow, we really are living in the future” way.

I don’t think anything in this section is groundbreaking enough to justify going full speed ahead on the crazy train, and it’s getting away from the narrower scope of “what does AI mean to creatives” a bit. But I think it does drive home my main point: that AI didn’t have to be this monster, that there are viable use cases here, that it might have been a positive development if certain people had cared a little more. At the end of the day, though, that’s not the road that was taken.